At Ignite, Microsoft just announced team customizations and imaging for Microsoft Dev Box. The goal of this feature is to improve developer productivity and happiness by reducing the time it takes to set up and maintain development environments. Team customizations began as an internal solution, called “ready to code” environments, built by the One Engineering System (1ES) team. Their benefits are a big part of the reason more than 35,000 Microsoft developers actively use Dev Box.

The original 1ES post covered how it all started and the amazing early results. Today we want to share some examples of how 1ES built our “ready to code” environments, and explain how the team customizations preview announced at Ignite will evolve to provide the same functionality directly in the Dev Box product.

For larger teams, representing these environments is extremely complex because of larger repositories, more tools (sometimes proprietary or legacy), and slower builds. Many customization steps are similar across teams, but dedicating the time and effort to create and maintain a shareable set of customizations is challenging. Making those customizations flexible enough to meet the needs of most teams in a company as large as Microsoft is even harder. The 1ES solution targets these challenges, and we have worked very closely with the product team to develop team customizations based on what we learned. If you decide to use these examples, you don’t need to worry about the future: as team customizations evolve and become public, we will migrate the 1ES deployment to team customizations and share a blog post on how you can do the same!

The biggest remaining differences between what was shared at Ignite and the 1ES approach described in this post are the conditional logic in 1ES templates and image artifacts. Artifacts are effectively scripts, built up from our CI/CD systems, that show how to install and configure parts of an environment (team customizations call these tasks). Conditional logic in templates allows very complex environments, which share a common core but each have unique requirements, to be represented with a much smaller and more maintainable script. Team customizations do support templates and provide many of the same benefits:

- Enhanced Security: Leveraging Azure Managed Identity ensures secure access to necessary assets during image creation. All artifacts placed on the image come from approved sources and are validated.

- Improved Performance: Images are preconfigured with Dev Drive and with secure, performance-friendly Windows Defender settings.

- Consistency and Reliability: The template provides smart defaults for creating standardized environments, reducing discrepancies in experience within and across teams. This means fewer “Works on my machine” cases.

- Flexibility: Customizable to allow teams to specify repositories to clone, build configurations, default tool installations, and additional customizations.

- Ease of Maintenance: Authoring image definitions in Azure Bicep allows mixing simple static declarations with logic, enabling code reuse while hiding the template’s complexity in Bicep modules provided by 1ES.

- Easy Refreshes: Microsoft developers are familiar with Azure Pipelines, and using them for managing image updates simplifies troubleshooting and maintenance.

- Centralized Improvements Delivery: Building Dev Box environments based on the template allows a central engineering team, like 1ES, to deliver improvements to Ready-To-Code environments across the company.
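To make the idea of conditional logic over a common core more concrete, here is a minimal, self-contained Bicep sketch. It is not taken from the 1ES template: the parameters `teamKind` and `includeVisualStudio` are hypothetical, and the WinGet package IDs are illustrative. Every image gets the common core, and plain conditional expressions add team-specific pieces on top.

```bicep
// Hypothetical sketch: one definition, a shared core, and per-team additions.
// Parameter names and package IDs are illustrative, not the 1ES template's inputs.

@description('Team flavor of the image (hypothetical parameter).')
@allowed([
  'dotnet'
  'node'
])
param teamKind string = 'dotnet'

@description('Whether to add the heavier Visual Studio install (hypothetical parameter).')
param includeVisualStudio bool = true

// Common core: every image gets these, regardless of team.
var commonTools = [
  'Git.Git'
  'Microsoft.VisualStudioCode'
]

// Team-specific additions selected with a conditional expression.
var teamTools = teamKind == 'dotnet' ? [
  'Microsoft.DotNet.SDK.8'
] : [
  'OpenJS.NodeJS.LTS'
]

// Optional additions; an empty array when the switch is off.
var optionalTools = includeVisualStudio ? [
  'Microsoft.VisualStudio.2022.Enterprise'
] : []

// Final list = common core + whatever the conditions selected.
output tools array = concat(commonTools, teamTools, optionalTools)
```

With `teamKind` set to `node` and `includeVisualStudio` set to `false`, the `tools` output is `['Git.Git', 'Microsoft.VisualStudioCode', 'OpenJS.NodeJS.LTS']`. Supporting another team flavor means adding a condition rather than copying the whole definition.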

Across Microsoft, teams create hundreds of Ready-To-Code images using the 1ES Dev Box Image Template. This requires the 1ES team to rigorously test updates to the template. Before completing each pull request, a small set of test images is built to cover most template features. As the first step of each template release, a larger set of images is created to mimic real image definitions from many customers. To further minimize the impact of potential regressions, updates are released in phases, starting with internal dogfooding using 1ES-owned Dev Box images. We use the Bicep Module Registry to distribute modules of the 1ES Dev Box Image Template to Microsoft teams, relying on module path tags to differentiate release phases. These tags also facilitate the quick delivery of hotfixes to a subset of customers when necessary.
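Consuming the template from a Bicep module registry looks much like a relative-path module reference, except that the module path carries a tag. The sketch below assumes a hypothetical registry (`contosomodules.azurecr.io`), module path, and `stable` tag; this post does not describe the real 1ES registry or its tag names, and the parameter types are guesses.

```bicep
// Hypothetical registry, module path, and tag; substitute your own.
// Parameter types are assumptions; the real module defines its own.
param imageName string
param galleryName string
param imageIdentity string
param builderIdentity string
param artifactsRepo object

// The tag after ':' selects the release phase this image definition consumes.
module devBoxImage 'br:contosomodules.azurecr.io/bicep/devbox-image:stable' = {
  name: 'MSBuildSdks-${uniqueString(deployment().name, resourceGroup().name)}'
  params: {
    imageName: imageName
    isBaseImage: false
    galleryName: galleryName
    repos: [
      {
        Url: 'https://github.com/microsoft/MSBuildSdks'
        Kind: 'MSBuild'
      }
    ]
    imageIdentity: imageIdentity
    builderIdentity: builderIdentity
    artifactsRepo: artifactsRepo
  }
}
```

Bicep requires module paths to be string literals, so the tag cannot come from a parameter; moving a team to another release phase is a one-line edit to the module reference.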

To extend the benefits of the 1ES Dev Box Image Template beyond Microsoft, this post shares our approach with the broader community. We have created a ready-to-use sample, derived from our internal template, that builds Ready-To-Code environments for several real-life open-source repositories. The sample uses Azure Image Builder, so you can rely on extensive online resources for service configuration and troubleshooting. By following a similar templatized approach, you can build images for all your other needs. Here are the key parts of the sample:

- README.md: Detailed steps for how to set up your own image builds.

- MSBuildSdks: Very simple image definition for a dotnet repo

- eShop: More advanced Ready-To-Code image definition for a dotnet repo

- Axios: Sample Ready-To-Code image definition for an npm repo

- devbox-image: Main Bicep module for the sample template

- build_images.yml: Sample Azure DevOps pipeline definition for building images

- Artifacts: Also known as customizers, these are PowerShell scripts that perform various image configuration tasks

The sample template offers the following key features:

- Git Repositories: Easily declare a set of repositories to clone, restore packages, build, and create desktop shortcuts. The template natively supports MSBuild/dotnet projects and installs the necessary SDKs.

- Image Identity: Automatically configure authentication using Azure Managed Identity for accessing Azure DevOps repositories and artifacts.

- Dev Drive: Simplifies the setup of Dev Drive on Dev Box images, hiding the complexities involved.

- Base Image: By default, the template uses an Azure Marketplace image with Visual Studio 2022, Microsoft 365 Apps, and many common tools installed.

- Default Tools: Ensures that essential tools are available and properly configured on the image, including VSCode, Visual Studio with extensions, Sysinternals Suite, Git, Azure Artifacts Credential Provider, and WinGet.

- Smart Defaults: Optimizes performance and security for developer scenarios on Dev Box by configuring Windows and Microsoft Defender, enabling long paths, and disabling Windows Reserved Storage and OneDrive.

- Image Chaining: Allows the creation of base images that can be used as a faster starting point for derived images.

- Compute Galleries: Publishes images to multiple compute galleries.

- Image Build Environment: Configures SKU and disk size for the VM used during image building.

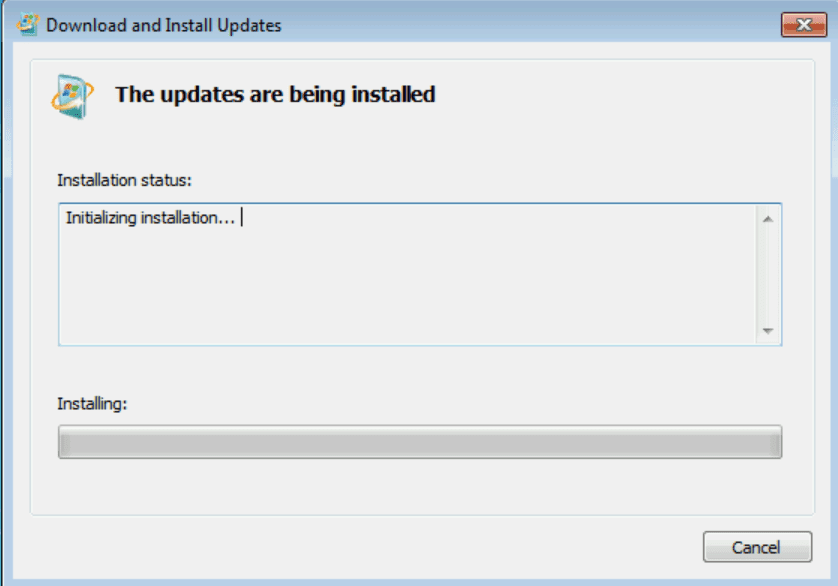
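Because the repository list is an ordinary Bicep array, an image definition can also generate it. This sketch reuses the `Url` and `Kind` fields from the sample's MSBuildSdks definition and assumes every repository is an MSBuild/dotnet repo; `repoUrls` is a hypothetical parameter and the second URL is a placeholder.

```bicep
// Hypothetical sketch: derive the template's repo entries from a plain list of URLs.
@description('Repositories to clone and build on the image (hypothetical parameter).')
param repoUrls array = [
  'https://github.com/microsoft/MSBuildSdks'
  'https://github.com/contoso/another-dotnet-repo' // placeholder URL
]

// One entry per URL; every entry is treated as an MSBuild/dotnet repo here.
var repoEntries = [for url in repoUrls: {
  Url: url
  Kind: 'MSBuild'
}]

output repos array = repoEntries
```

The resulting array has the same shape as the `repos` value in the sample, so it could be passed to the image module in place of a hand-written list.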

Below is a code snippet from the MSBuildSdks image definition. Despite its simplicity, this definition uses the template to produce a Dev Box-compatible image that incorporates most of the features mentioned earlier. The template takes care of cloning and building the repository.

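```bicep
// MSBuildSdks image definition built on the sample's main module.
// imageName, galleryName, imageIdentity, builderIdentity, and artifactsRepo
// are declared elsewhere in the file (not shown in this snippet).
module devBoxImage '../modules/devbox-image.bicep' = {
  // Deployment name made unique per deployment and resource group
  name: 'MSBuildSdks-${uniqueString(deployment().name, resourceGroup().name)}'
  params: {
    imageName: imageName
    isBaseImage: false // not marked as a base image for image chaining
    galleryName: galleryName
    // Repositories to clone and build on the image
    repos: [
      {
        Url: 'https://github.com/microsoft/MSBuildSdks'
        Kind: 'MSBuild'
      }
    ]
    imageIdentity: imageIdentity
    builderIdentity: builderIdentity
    artifactsRepo: artifactsRepo
  }
}
```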
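Image chaining starts from an image marked as a base image. As a hedged sketch, a base image definition could reuse the same module with `isBaseImage` set to `true`. The `baseImageName` parameter, the empty `repos` list, and the parameter types are assumptions, and how a derived image points back at this base is not covered in this post.

```bicep
// Hypothetical sketch of a base image for image chaining.
// Parameter types are assumptions; the sample declares its own.
param baseImageName string // hypothetical parameter for the base image's name
param galleryName string
param imageIdentity string
param builderIdentity string
param artifactsRepo object

module baseImage '../modules/devbox-image.bicep' = {
  name: 'Base-${uniqueString(deployment().name, resourceGroup().name)}'
  params: {
    imageName: baseImageName
    isBaseImage: true // publish as a starting point for derived images
    galleryName: galleryName
    repos: [] // assumption: a shared base clones no team repositories
    imageIdentity: imageIdentity
    builderIdentity: builderIdentity
    artifactsRepo: artifactsRepo
  }
}
```

Keeping slow, shared setup in the base image is what lets derived images start from a faster point, as described in the Image Chaining feature.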

The 1ES Dev Box Image Template offers a great deal of flexibility, control, and troubleshooting options. However, this comes at a cost: teams are responsible for setting up and managing their image-building pipelines and need some knowledge of Azure, which can mean a steep learning curve for teams without experience in these technologies. The new team customizations feature shared at Ignite is much easier to get started with, but for larger organizations with more complex environments, the benefits of the 1ES solution are significant. 1ES will continue to work closely with the Dev Box product group to integrate many of the 1ES Dev Box Image Template’s capabilities into the product itself, making the experience simpler while preserving as many benefits as possible. We are committed to helping our internal and external customers migrate when the time comes.

The post Dev Box Ready-To-Code Dev Box images template appeared first on Engineering@Microsoft.